My AI Budget Is Burning Cash

Here's How I'm Planning on Fixing It with The AI Velocity Framework.

Let's be honest. The AI hype in marketing is loud. Every marketing leader, whether you're running a department in a B2B tech company, a traditional business, or steering an agency like me, is feeling it: the pressure to jump in, the FOMO, the whispers about competitors crushing it with new tools.

But in those quiet moments, the real questions I keep asking myself are: "Okay, but... how do I actually know this AI stuff is working? How do I show our clients or internal stakeholders that these tools are making us faster, sharper, and actually hitting the mark?"

Because here’s the rub: AI promises speed, efficiency, and results delivered yesterday. Awesome. But proving that in the messy, deadline-driven, stakeholder-juggling reality of modern marketing? That’s tough.

Old-school ROI that looks only at cost savings doesn’t capture the whole picture. What we really need is a way to measure velocity: how fast AI helps you ship deliverables, slash inefficiencies, and, crucially, get your business or your clients to the 'aha!' moment faster.

In thinking through this, I decided to work on a practical playbook for building that measurement engine. I'm calling it our “AI Velocity Framework”. I’m trying to move past theory in my own business and define concrete metrics I can make decisions on, plus steps I can actually use, with the goal of quantifying the real momentum AI brings to our marketing efforts.

The AI Arms Race: Why Measurement Isn't Optional Anymore

Before I get into it, I think it’s important to look a bit deeper into why measurement is so pressing right now. So, let’s look at what’s driving this pressure for me and others like me.

According to CoSchedule, a massive 85% of marketers are already playing with AI for content creation, and over half (56%) of companies are actively rolling it out as part of a planned strategic initiative. Makes you wonder what the other 44% are thinking.

And surely in many of those companies, execs are poking their marketing teams, asking, "So, what's our AI plan?"

The message is clear: knowing your way around AI is shifting from 'nice extra skill' to 'basic job requirement.' It's becoming table stakes just to compete, no matter your business model. (See my discussion with Jacob Bank on the profile of the new marketer in the age of AI. It’s enlightening.)

But just having the tools isn't winning the game. The real flex? Proving they actually work. Or maybe even better, beating everyone else in realizing the promise of AI. Getting serious about measurement is make-or-break for marketing departments everywhere, and here’s why it’s important here at Mighty & True:

Show Me the Money (Justification): Let's face it, AI isn't free. Tools, training, time, it adds up. Solid measurement will give me the ammo to prove the ROI, set budgets, and secure buy-in across the company. Without it, my AI initiatives look like expensive hobbies, not strategic investments.

Double Down on What Works (Optimization): Not all AI is created equal. Measurement will help me spot the winners, the tools and tactics actually moving the needle, so I can ditch the wasted spend and time and focus my resources where they count.

Stakeholder Communication & Value: Whether you're reporting to internal leadership or external clients or running an agency like me, demonstrating value is paramount. Quantifying how AI speeds up campaigns, nails personalization for better results, improves pipeline velocity, or makes budgets work harder? That’s gold for stakeholder relationships.

Stay Ahead of the Pack (Competitive Edge): In our crowded and competitive market, the marketing organizations who can prove their AI advantage will win. Measurement fuels constant improvement, ensuring we're not just using AI, but using it better than the competition to deliver faster and better business outcomes. Speed to outcome is the goal.

The unique pressure cooker of modern marketing, juggling diverse stakeholders, insane deadlines, shifting goals, and the constant need to prove our worth, makes measuring AI's speed and impact on outcomes extra critical.

It's not just reporting; it's strategy. With everyone jumping on the AI bandwagon and leadership (me) demanding proof, quantifying the benefits is how we’ll stand out.

In a broader sense, teams fumbling the ROI story are going to struggle. Those who nail measurement will be able to confidently assess exactly how AI delivers, where to invest or divest, and how to secure that early and crucial competitive edge.

And here’s a key insight: while everyone initially talks about AI saving costs and efficiency, the real game-changer for marketing teams is often elsewhere: in the outcomes.

For Mighty & True, we’ve already seen our AI investments unlock better targeting, hyper-targeted personalization, faster insights, and quicker optimization cycles. And we’re still in early days.

These aren't just minor internal tweaks either; they directly speed up business wins: faster conversions, quicker lead progression, hitting campaign goals sooner, accelerating pipeline.

For your teams, showing that accelerated business value is way more powerful than just saying you saved a few bucks internally. It’s time to shift how we measure and talk about AI and focus on how it makes all our businesses win, faster.

Speed, Efficiency, Outcomes: Decoding What AI Actually Does for You

Okay, let's break down the buzzwords for The AI Velocity Framework. To measure AI's impact in your marketing world, you need to know what you're measuring. Think of it in three core dimensions:

Speed (Doing Stuff Faster): This is about cutting down the time it takes to get marketing tasks done. For your team, this looks like:

Drafting blog posts, emails, or ad copy in record time.

Getting campaigns out the door quicker.

Spending less time crunching data and more time acting on insights.

Making smarter decisions, faster, thanks to AI recommendations.

Efficiency (Doing More with Less): This is about optimizing your team's resources (time, money, people) to get better results without burning more fuel. Think:

Lowering project or campaign costs, maybe by automating tasks or needing less external support.

Boosting your team's output (more campaigns, more content per person).

Cutting down on errors in things like creative, QA, campaign setup or reports.

Automating the boring, repetitive stuff so your team can focus on the big picture.

Accelerated Outcomes (Getting to the Win Faster): This is the big one. It measures how quickly AI helps you hit those crucial marketing and business goals. This means:

Shrinking the time it takes to turn a lead into a qualified opportunity or sale.

Hitting key campaign targets (like CPA, ROAS, or engagement goals) or internal goals (like MQL velocity) sooner.

Generating those game-changing market or customer insights faster, leading to quicker strategy shifts.

Reaching ROI goals or other financial targets (like pipeline value) in less time.

These three aren't isolated; they feed each other.

Faster task completion (Speed) usually means lower costs or more capacity (Efficiency). And when you strategically use that speed and efficiency, like analyzing data faster for quicker insights, or running tests more rapidly for optimization, you directly accelerate those desired Outcomes.

Now, while Speed and Efficiency are crucial for running a tight ship and boosting profitability, demonstrating Accelerated Outcomes? That should be our superpower.

Businesses invest in results: leads, pipeline, revenue, growth. AI's real magic lies in hitting those targets faster through smarter insights, refined targeting, better messaging, tight personalization, and rapid-fire optimization.

So, my AI Velocity Framework has to prioritize tracking how AI speeds up business wins. AI is not just an internal efficiency hack. It’s a secret weapon for delivering success faster than ever.

Your AI Measurement Toolkit: Metrics That Actually Matter

Alright, let's get practical.

You can't measure AI's impact without the right tools, in this case, Key Performance Indicators (KPIs). And these should not be vanity metrics.

We need SMART goals: Specific, Measurable, Achievable, Relevant, and Time-bound, tied directly to what I’m trying to achieve with AI for my business and my customers.

Rule #1: Baseline or Bust.

Before we unleash AI on any process or campaign, I must know where I’m starting from. I’ll start by measuring current performance using the KPIs I choose before AI enters the picture.

I think the last 30 days of historical data seems right, or whatever gives me a solid benchmark. Documenting this "before" picture is non-negotiable. It's the only way to truly see the "after" impact.

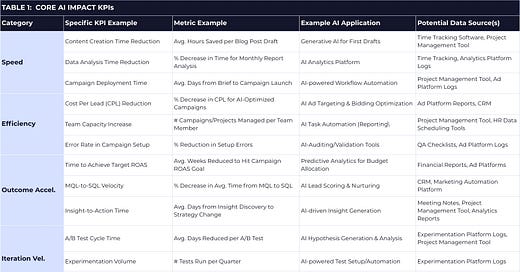

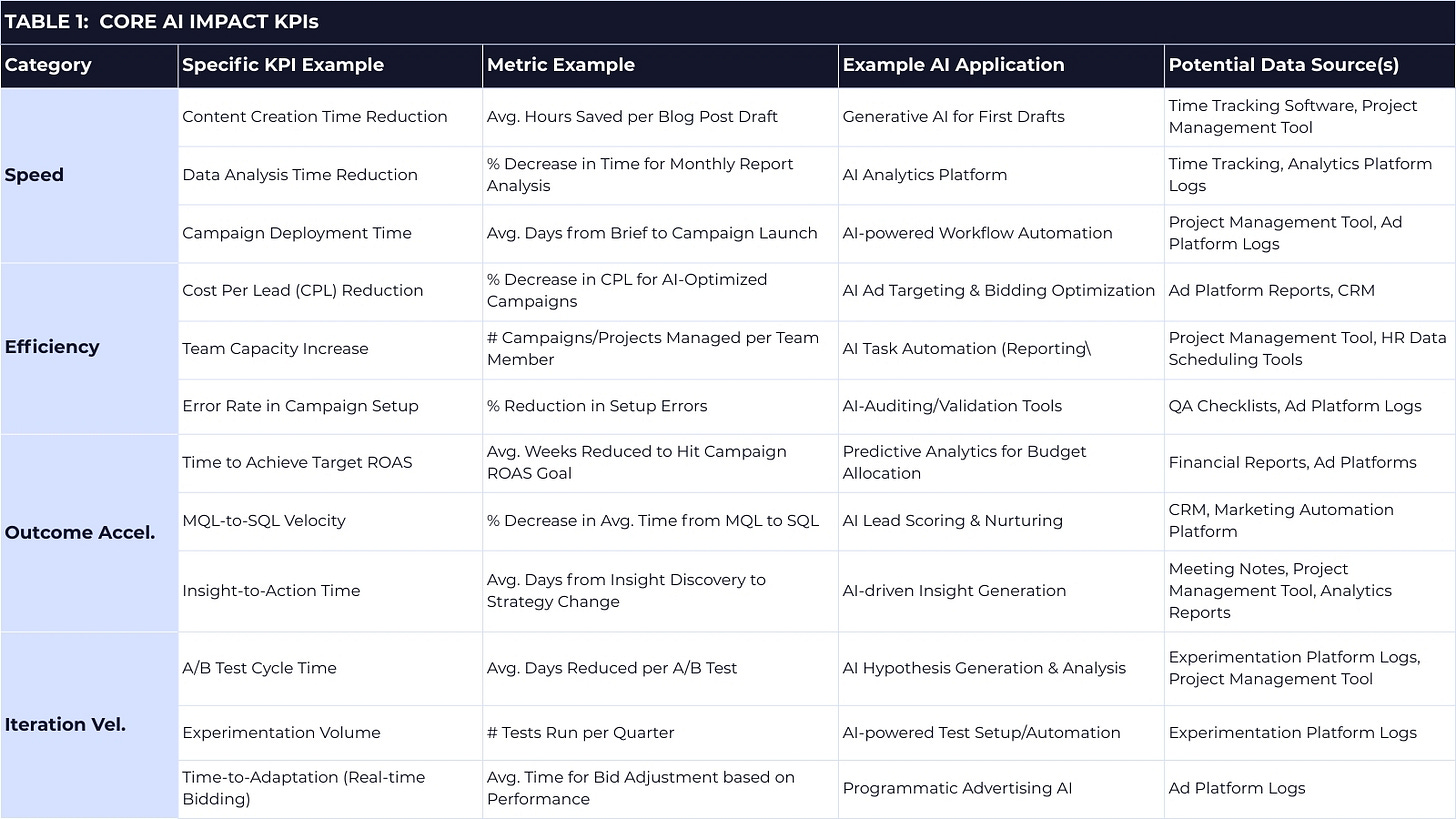

Here’s a menu of potential metrics I’m thinking of for my AI Velocity Framework, geared for the modern marketing grind:

Speed Metrics (How Much Faster?):

Task Time Slashed: How much time am I saving on specific, repeatable tasks? Think: Hours saved drafting blog posts, time cut generating ad variations, less time analyzing campaign data, or faster report building. I will then track time before and after AI.

Workflow Velocity: How much faster are our core processes end-to-end? Examples: Brief-to-live campaign time, total content production time, or the lag between finding an insight and acting on it.

Output Cadence: This is an easy one. Are we launching more campaigns, publishing more content, or sending more emails per week/month?

Time-to-Insight: How much quicker can you pull actionable insights from data using AI tools?

System Response Time (Latency): This is a tough one but I’ll add it anyway. If we're using real-time AI (like chatbots), how fast do they respond (milliseconds)? Slow AI kills user experience.
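Here's a minimal sketch of the before-and-after comparison behind Task Time Slashed. It assumes we log minutes per task by hand or export them from a time tracker. The task names and numbers are made up for illustration, not real results.

```python
from statistics import mean

# Hypothetical time logs in minutes per task, before and after adopting an AI tool.
baseline_minutes = {
    "blog_draft": [180, 200, 160],
    "ad_variations": [90, 75, 105],
}
ai_minutes = {
    "blog_draft": [60, 75, 45],
    "ad_variations": [40, 50, 45],
}


def time_saved_pct(before, after):
    """Percent reduction in average task time (positive means faster)."""
    avg_before = mean(before)
    avg_after = mean(after)
    return (avg_before - avg_after) / avg_before * 100


def task_time_report(before_logs, after_logs):
    """Per-task percent time saved, for tasks present in both logs."""
    return {
        task: round(time_saved_pct(before_logs[task], after_logs[task]), 1)
        for task in before_logs
        if task in after_logs
    }


report = task_time_report(baseline_minutes, ai_minutes)
for task, pct in report.items():
    print(f"{task}: {pct}% less time per task")
```

Keeping the report per task rather than one department-wide average makes it easier to see which tasks actually got faster.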

Efficiency Metrics (Doing More with Less?):

Cost Savings: Where are the dollars dropping? Cost per campaign/project, per lead, per deliverable? Pinpoint savings from less external support or consolidating tools.

Team Power-Up (Resource Utilization): Is your team handling more campaigns/projects per person? Are they spending less time on grunt work and more on strategy, creativity, or stakeholder management?

Output Volume: Are we producing more stuff with the same resources? More social posts, more A/B tests, more personalized emails?

Error Reduction: Are fewer mistakes happening in campaign setup, data entry, or reporting thanks to AI checks or automation?

Throughput (for Agents and Automation): For automated flows (like lead routing), how many tasks are getting done per hour/day?

Outcome Acceleration Metrics (Winning Faster?):

Goal Achievement Speed: How much faster are we hitting specific campaign goals (e.g., time to hit target lead volume, CPA, or ROAS)? Or internal goals like target pipeline value?

Conversion Velocity: Is the customer journey speeding up? Shorter time from lead to MQL/SQL/conversion? Faster sales cycles?

Performance Lift Speed: How quickly do AI optimizations show results? (e.g., time to see a 15% conversion lift after AI personalization).

Insight Velocity & Impact: How fast does AI generate key insights, AND how quickly do those insights lead to actual strategy changes or campaign pivots?

SEO Ranking Speed: Is AI-optimized content hitting target rankings faster than our old methods?
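Goal Achievement Speed can be sketched as "how many days until cumulative leads hit the target." The snippet below assumes we can export daily lead counts for two comparable campaigns. The counts and the target of 40 leads are placeholders.

```python
from itertools import accumulate


def days_to_target(daily_leads, target):
    """Return the 1-based day on which cumulative leads first reach target, or None."""
    for day, total in enumerate(accumulate(daily_leads), start=1):
        if total >= target:
            return day
    return None


# Hypothetical daily lead counts for a pre-AI campaign and an AI-assisted one.
baseline_campaign = [5, 8, 6, 7, 9, 10, 8, 7]
ai_campaign = [9, 12, 11, 14, 10, 9, 8, 7]

target_leads = 40
baseline_days = days_to_target(baseline_campaign, target_leads)
ai_days = days_to_target(ai_campaign, target_leads)

print(f"Baseline hit {target_leads} leads on day {baseline_days}")
print(f"AI-assisted hit {target_leads} leads on day {ai_days}")
```

This only means something if the two campaigns are genuinely comparable in budget, audience, and season. Otherwise the difference may have nothing to do with AI.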

Iteration Velocity Metrics (Learning Faster?):

Experiment Cycle Time: How much faster can we design, run, and analyze A/B tests with AI's help? Creative iterations, etc…

Experiment Volume: Are we running more tests per campaign or time period because AI speeds things up?

Optimization Speed: How much faster do AI methods of optimization find the winning variation compared to traditional A/B tests? Measure faster convergence or reduced 'cost' of showing bad variations.

Adaptation Speed: How quickly are campaigns, bids, or creative adjusted based on real-time data and AI recommendations?

Qualitative Metrics (The "Feel" Factor):

Numbers don't tell the whole story. Capture the fuzzier stuff too:

Team Capacity & Focus: Ask your team: Do they feel less bogged down? Are they doing more interesting work?

Morale Boost: Is automating tedious tasks making the team happier or less burnt out?

Innovation Spark: Are new services, campaign ideas, or analysis methods popping up thanks to AI?

Stakeholder/Client Happiness: Are client satisfaction scores (NPS, CSAT), internal stakeholder feedback, or retention rates improving in correlation with your AI efforts?

Table 1: Your Core AI Impact KPIs

The Rules of the Game

Applying all this will take rigor and consistency. But it will also take a bit of spatial thinking. Here are some rules I’ll apply to my testing:

Get Granular: Measuring "average time saved across the department" is okay, but the real insights are in the details. Marketing teams juggle tons of different tasks. I’ll need to track savings per specific task (like content drafting or data analysis), per deliverable type, or even per campaign. This pinpoints where AI is truly shining and helps me focus my efforts. I think I’ll pick 4-5 data points to track first (probably the easiest ones) and move from there once I get some data.

Connect the Dots: Faster A/B testing is cool, but does it actually lead to better business results? Maybe, maybe not. Faster testing only matters if your ideas are good and you use the results to improve outcomes like conversion rates or pipeline velocity.

My framework must track both the process speed-up (more tests) and the outcome acceleration (hitting higher conversion rates faster). This forces me to ask: are we just spinning wheels faster, or are we actually getting the business to its destination sooner?

Proof in the Pudding: Real Teams, Real AI Wins

Okay, enough theory. Does this stuff actually work in the real world? Absolutely.

Companies and agencies are already using AI to get demonstrably faster, leaner, and achieve outcomes quicker.

We don’t have to look much farther than the AI platform providers to see that companies are already realizing efficiency. Just look at Jasper’s Customer Stories page to see the wins their customers are realizing. Of note, every customer story listed would rank in my “speed” and “efficiency” categories, but very few are really touting business outcomes. At least not overtly.

Vanguard took it a step further when they used the AI platform Persado to create AI-powered tests that resulted in a 16% increase in conversions and an abundance of insights that can be recycled and used in new campaigns. This is closer to what we are talking about here: use of AI for speed to outcome.

And in my research, people on the ground are seeing it too. As one Redditor put it bluntly: "Digital marketing is basically becoming AI marketing. The way AI can optimize ad performance, test variations automatically, and even tweak messaging mid-campaign is nuts". That certainly captures my excitement around AI's power in core marketing work.

The Big Takeaway: These examples are good starting points but we’re not there yet: A consistent measurement framework on speed, efficiency, and outcome acceleration needs to be more than just academic. Real businesses are going to demand more real and measurable results in these areas. The case studies available today help me to validate that building my own AI Velocity Framework around these core dimensions is needed.

Notice a Pattern? Many wins I am seeing are tied to specific tools: for content, for copy, for journeys, for predictive analytics, for forecasting. Marketing teams (and our Mighty & True team) juggle a whole toolbox of tech, whether in an agency or in-house.

This means you can't just measure "AI" as one big blob. You need to track the impact of each specific tool in your stack. Which ones are pulling their weight? Which ones are driving the most value?

Again, a granular view is essential for smart decisions about what tools to keep, ditch, or integrate deeper.
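One rough way to see which tools are pulling their weight is cost per hour saved, tool by tool. This is a sketch under my own assumptions: the tool names, hours, and costs are placeholders, and hours saved is only one slice of value, so treat the ranking as a conversation starter rather than a verdict.

```python
from collections import defaultdict

# Hypothetical log of hours saved, tagged by tool. Tool names are placeholders.
time_saved_log = [
    ("copy_tool", 6.0),
    ("analytics_tool", 2.5),
    ("copy_tool", 4.0),
    ("journey_tool", 1.0),
]

# Hypothetical monthly subscription cost per tool, in dollars.
monthly_cost = {"copy_tool": 100.0, "analytics_tool": 250.0, "journey_tool": 50.0}


def hours_saved_by_tool(log):
    """Sum logged hours saved for each tool."""
    totals = defaultdict(float)
    for tool, hours in log:
        totals[tool] += hours
    return dict(totals)


def cost_per_hour_saved(hours_by_tool, cost_by_tool):
    """Dollars spent per hour saved; tools with no logged savings get infinity."""
    result = {}
    for tool, cost in cost_by_tool.items():
        hours = hours_by_tool.get(tool, 0.0)
        result[tool] = cost / hours if hours > 0 else float("inf")
    return result


hours = hours_saved_by_tool(time_saved_log)
ranking = sorted(cost_per_hour_saved(hours, monthly_cost).items(), key=lambda kv: kv[1])
for tool, dollars in ranking:
    print(f"{tool}: ${dollars:.2f} per hour saved")
```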

Dodging the Potholes: Tackling AI Measurement Headaches

So, measuring AI is crucial. But let's be real, it's not going to be easy. Lots of companies are struggling to get real value from their AI spend, and marketers aren't exactly brimming with confidence about tracking things like creative impact.

Using Google Gemini Deep Research, I pulled the top hurdles that marketing leaders are seeing in their AI efforts. I agree with all of them.

Common Marketing Pain Points:

Data Dumpster Fires: "Garbage in, garbage out" is AI's mantra. Marketing teams often deal with data scattered everywhere—different clients, internal tools, marketing platforms. It's messy, inconsistent, incomplete, maybe even trapped in silos. Just getting clean, usable data integrated is a massive first step. Plugging new AI tools into your old systems can also be a technical nightmare.

Who Gets the Credit? (Attribution): Figuring out which specific AI-powered email or ad variation actually led to that sale or influenced pipeline in a complex, multi-channel campaign? Good luck. Old attribution models often fall flat.

Pinpointing exactly how much revenue or pipeline value an AI tool generated is a major headache, especially when it touches multiple parts of the journey. The irony? AI itself might be needed to build better attribution models.

Where Did We Start? (Baselines): You need that "before" picture, but getting clean baseline data can be tough if processes were sloppy or undocumented pre-AI. That 30-day baseline period? Crucial, but requires planning.

Measuring the Fluff (Intangibles & Creative): How do you put a number on "team focuses more on strategy now" or "our brand seems more innovative"? Or quantify exactly how AI improved that killer headline? It's hard, but ignoring these softer benefits misses part of the story.

The Real Cost (Total Cost of Ownership): It's not just the subscription fee. Think implementation, integration, data prep, training time (and cost!), ongoing maintenance, cloud compute costs (hello, "bill shock"!), and even API call fees for LLMs. It all adds up.

Who Knows This Stuff? (Skill Gaps): Many marketing teams just don't have the in-house AI chops to implement, manage, and measure effectively. The training needed is often underestimated.

"But We've Always Done It This Way" (Resistance & Culture): AI often means changing workflows, and change can be scary. Without strong leadership backing (sometimes shaky due to fuzzy ROI or cost fears) and a culture that allows for trial-and-error, AI adoption (and measurement) can stall.

The Ethical Tightrope (Bias, Privacy, Trust): Making sure AI is fair, unbiased, and respects privacy regs (GDPR, CCPA) is non-negotiable. Biased data leads to biased results, wrecking trust and potentially your reputation. AI "hallucinations" (making stuff up) also erode trust. Humans must stay in the loop.

Dashboard Overload (Unifying Reports): Pulling data from all your different AI tools, client platforms, and internal systems into one clear picture? Still a massive challenge for many, making a holistic view elusive.

So should we just give up?

Hell no. These challenges may seem insurmountable, but with time they can be solved. Here’s where we are spending time:

Data: Cleaning house before we scale AI. Invest in data management. Start measuring with what we have, even if it's messy, and improve over time.

Attribution: We’re looking into AI-powered or multi-touch models. Accept imperfection initially; focus on trends.

Baselines: Be disciplined. Document our starting point clearly.

Intangibles: Use surveys, interviews, and sentiment analysis to capture the qualitative side.

Costs: Track everything that matters. Get our finance team involved early. Scrutinize tool pricing.

Skills: Invest in training. Start with user-friendly tools or outsource complex stuff initially. We’re starting with Skillshare + targeted YouTube sessions and moving on from there.

Change: Get leadership buy-in with clear goals (even estimated ROI helps). Foster experimentation. Pilot projects build momentum. This one is easy. I’m bought in already, but skeptical around tools and cost + wary of our tech exploding over time.

Ethics: We need to be transparent with clients and stakeholders. We already have strong governance, ethical rules, content filters, fail-safes, and human oversight. Check out our AI policy here.

Reporting: We’re beginning to design some new dashboards that can pull data together for a semi-unified view. We aren’t going to let the tech destroy progress though. We’re moving fast.

The Real Roadblocks:

Often, the biggest hurdles aren't fancy metrics. It's the basics: messy data, no clear strategy for AI, forgetting to set baselines, or a team culture that resists change. You can have the best framework on paper, but it won't work if these fundamentals are broken. So, job #1 is often strengthening these basics: data hygiene, clear goals, baseline discipline, and an adaptive culture.

Ethics = Good Measurement:

Don't treat ethics like a separate checkbox. Privacy concerns, bias, lack of transparency, these aren't just risks, they mess up your measurements. Biased data means biased AI, which means skewed, unreliable metrics.

Privacy rules limit the data you can even use. Tackling ethics head-on isn't just about doing the right thing; it's essential for making sure your measurements are accurate, trustworthy, and legal.

The Action Plan: Building My Company's AI Velocity Framework

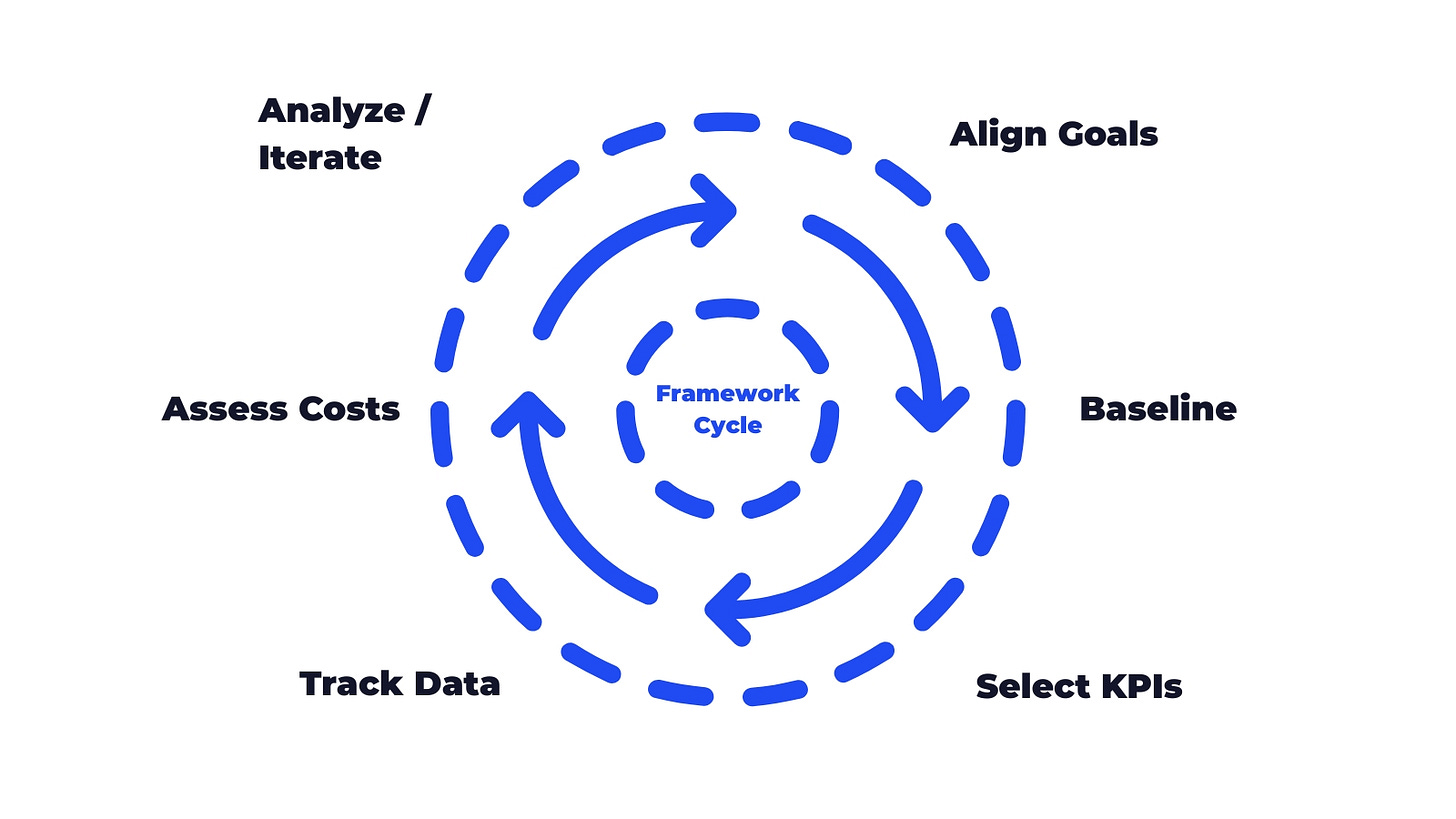

Okay, let's build this thing. Creating The AI Velocity Framework won’t be a one-and-done task; it will be a living, breathing process. As AI changes and our business evolves, the framework needs to keep up. I think of it like building a flywheel: define the core components, get them spinning, and continuously optimize.

Here’s a step-by-step guide tailored for what we’re building:

Step 1: Define Your North Star (Align Goals with Business Wins)

Action: Get crystal clear: What specific business problems will AI solve? What opportunities will it unlock? Crucially, we need to tie these directly to our big-picture goals, whether that's agency growth and client success, or internal company objectives like pipeline growth, market share, or customer lifetime value. Don't just "use AI"; use AI to achieve something specific.

Think: Use SMART criteria. Remember the different flavors of ROI: Measurable (direct impact), Strategic (long-term game), and Capability (getting smarter with AI). What does success look like, and how will you know when you get there?

Example: Instead of "Implement AI for lead gen," aim for "Lift MQL-to-SQL velocity by 20% via AI lead scoring within 6 months."
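To make a goal like that checkable, it helps to write it down as data. The sketch below is one way to do it, and it makes an assumption worth flagging: I'm reading "lift velocity by 20%" as "cut average MQL-to-SQL days by 20%." The class name, the 30-day baseline, and the deadline date are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class VelocityGoal:
    """A SMART-style goal for shortening a cycle time (all values illustrative)."""

    metric: str
    baseline_days: float
    target_reduction_pct: float
    deadline: date

    @property
    def target_days(self) -> float:
        """Cycle time we need to reach, derived from the baseline."""
        return self.baseline_days * (100 - self.target_reduction_pct) / 100

    def is_met(self, current_days: float, as_of: date) -> bool:
        """True if the target cycle time was reached on or before the deadline."""
        return current_days <= self.target_days and as_of <= self.deadline


# Assumed numbers: a 30-day baseline and a six-month window.
goal = VelocityGoal(
    metric="MQL-to-SQL days",
    baseline_days=30.0,
    target_reduction_pct=20.0,
    deadline=date(2026, 6, 30),
)

print(f"Target: {goal.target_days} days by {goal.deadline}")
print("Met:", goal.is_met(current_days=23.5, as_of=date(2026, 5, 15)))
```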

Step 2: Know Your Starting Line (Establish Baselines)

Action: Before we flip the switch on an AI tool or process, we’ll measure current performance using the KPIs we'll pick next. We’ll track over a solid period (30 days, last quarter, previous campaign) and document this 'status quo' meticulously.

Think: This isn't glamorous, but it's non-negotiable. Without a clear "before," you can't prove the "after." Get the cleanest data possible.

Example: Measure a customer’s average MQL-to-SAL time for the quarter before implementing AI lead scoring.
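A baseline like that can be a few lines of arithmetic once the dates are exported. This sketch assumes a CRM export with an MQL date and an SAL date per lead. The four leads below are invented purely to show the calculation.

```python
from datetime import date
from statistics import mean, median

# Hypothetical CRM export: (mql_date, sal_date) per lead for the baseline quarter.
leads = [
    (date(2025, 1, 6), date(2025, 1, 20)),
    (date(2025, 1, 13), date(2025, 2, 2)),
    (date(2025, 2, 3), date(2025, 2, 13)),
    (date(2025, 3, 3), date(2025, 3, 19)),
]


def stage_days(pairs):
    """Days elapsed between the two stage dates for each lead."""
    return [(end - start).days for start, end in pairs]


durations = stage_days(leads)
baseline = {
    "leads": len(durations),
    "mean_days": mean(durations),
    "median_days": median(durations),
}
print(baseline)
```

I'd record the median alongside the mean, since a handful of leads that stall for months can drag the average around.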

Step 3: Pick Your Gauges (Select Core KPIs)

Action: We’ll choose a manageable set of KPIs from the measurement toolkit above (Speed, Efficiency, Outcome Acceleration, Iteration Velocity) that directly track progress towards Step 1's goals. I’m thinking four at the max. We’ll make sure to tailor them to our context.

Think: The key will be to balance hard numbers (quantitative) with feedback (qualitative). We don’t want to drown in data; we want to focus on the vital few metrics that truly show impact. What are the 3-5 most critical indicators of success for this specific AI initiative?

Example: Time to complete a task before and after AI (Efficiency, quantitative); Creative Team Feedback Score (qualitative).

Step 4: Build the Engine (Implement Tracking & Data Collection)

Action: We’ll need the tools and processes in place to capture KPI data consistently and accurately. We have a few core ones: Asana, ad platforms, and team surveys. We also need to make sure our AI tools themselves are set up to be tracked! Address data quality and integration head-on.

Think: How will we get the data? Use what we have (CRM, analytics, project tools). Automate collection where possible. This is where data silos can kill our progress.

Example: Ensure Asana accurately tracks hours per task; make sure we can track a before-and-after metric; integrate AI analysis of data; set up regular surveys for team feedback.

Step 5: Count the Fuel (Factor in ALL Costs)

Action: We use Everhour for tracking time. We’ll need to track every cost associated with our AI push. This is the Total Cost of Ownership (TCO).

Think: It's more than just software licenses. We need a light analysis of all the costs including implementation, integration, training (time + expenses!), maintenance, infrastructure (compute, storage, API calls!), specialized staff. We need an honest swag about the investment.

Example: Keep a running tally of monthly SaaS fees, estimated internal hours for integration & training, and direct API costs.
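That running tally might look something like the sketch below. Every figure is a placeholder, and the blended hourly rate for internal time is my own assumption, so swap in whatever finance signs off on.

```python
# Hypothetical monthly cost inputs; every figure here is a placeholder.
saas_fees = {"copy_tool": 99.0, "analytics_tool": 250.0}
internal_hours = {"integration": 10.0, "training": 6.0}
hourly_rate = 75.0  # assumed blended internal rate, in dollars
api_costs = 42.50   # assumed direct API spend for the month


def monthly_tco(saas, hours, rate, api):
    """Break the month's AI spend into SaaS, internal labor, and API costs."""
    saas_total = sum(saas.values())
    labor_total = sum(hours.values()) * rate
    return {
        "saas": saas_total,
        "labor": labor_total,
        "api": api,
        "total": saas_total + labor_total + api,
    }


tco = monthly_tco(saas_fees, internal_hours, hourly_rate, api_costs)
for line_item, dollars in tco.items():
    print(f"{line_item}: ${dollars:,.2f}")
```

In this made-up month, internal hours dwarf the subscription fees, which is exactly the kind of cost that disappears if we only track licenses.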

Step 6: Tune the Engine (Analyze, Report, Repeat)

Action: We’ll regularly (likely per task, per campaign, and monthly) crunch the KPI data. Calculate ROI (Simple ROI = (Return - Cost) / Cost * 100%) and compare it to our baseline.

Think: This is the learning loop. What's working? What's not? We’ll need to generate clear reports for our leadership team and use the insights to tweak AI use, adjust campaigns, retrain models, and refine The AI Velocity Framework itself. We should all remember the AI learning curve; performance might improve over time.
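The simple ROI calculation from this step is easy to sketch. Here I treat "return" as the gross dollar value we attribute to the AI initiative before subtracting costs, which is my assumption, and the dollar figures are invented.

```python
def simple_roi_pct(total_return, total_cost):
    """Simple ROI as a percentage: (return - cost) / cost * 100."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_return - total_cost) / total_cost * 100


# Hypothetical month: $2,400 of attributed value against $1,600 of total cost.
roi = simple_roi_pct(total_return=2400.0, total_cost=1600.0)
print(f"Simple ROI: {roi:.1f}%")
```

The hard part is the attributed value going in. The formula will happily produce a confident-looking number from a shaky estimate.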

The AI Velocity Framework Cycle:

Think of these steps not as linear, but as a continuous cycle:

Align Goals: What business outcome are we driving?

Baseline: Where are we now?

Select KPIs: How will we measure progress?

Track Data: Gather the evidence.

Assess Costs: What's the investment?

Analyze & Iterate: What did we learn? How do we improve? (This feeds back into Goal Alignment for the next cycle).

Example: Produce a quarterly "AI Impact Snapshot": MQL velocity change (vs. baseline), conversion rate impact, team feedback trends, calculated ROI. Use it to decide: refine the scoring model, expand usage, or pivot?
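As a rough sketch, the snapshot could be assembled from the baseline and the current quarter like this. The field names, the 1-to-5 team feedback scale, and all of the numbers are assumptions for illustration.

```python
def pct_change(baseline, current):
    """Percent change from baseline to current."""
    return (current - baseline) / baseline * 100


def impact_snapshot(baseline, current, roi_pct):
    """Summarize the quarter against the baseline (negative days change means faster)."""
    return {
        "mql_to_sql_days_change_pct": round(
            pct_change(baseline["mql_to_sql_days"], current["mql_to_sql_days"]), 1
        ),
        "conversion_rate_change_pts": round(
            (current["conversion_rate"] - baseline["conversion_rate"]) * 100, 2
        ),
        "team_feedback_change": round(
            current["team_feedback"] - baseline["team_feedback"], 2
        ),
        "roi_pct": roi_pct,
    }


# Hypothetical baseline and current-quarter figures.
baseline_quarter = {"mql_to_sql_days": 30.0, "conversion_rate": 0.040, "team_feedback": 3.4}
current_quarter = {"mql_to_sql_days": 24.0, "conversion_rate": 0.046, "team_feedback": 3.9}

snapshot = impact_snapshot(baseline_quarter, current_quarter, roi_pct=50.0)
for name, value in snapshot.items():
    print(f"{name}: {value}")
```

I've left the refine, expand, or pivot decision out of the code on purpose. The snapshot informs that call, but a person should make it.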

Remember: This Isn't Set in Stone. AI moves fast. This framework needs to keep pace. We’ll likely review it quarterly or yearly. Are there new AI tricks? Have our business or client goals shifted? Is our team getting savvier with AI? Adapt accordingly.

Pro Tip: Measure Getting Smarter. Especially early on, track "Capability ROI." Skill gaps are real, and initial projects are often learning labs. So, I’d suggest formally measuring if your team is getting better with AI tools, if AI is truly baked into workflows, or if you've developed new AI-powered processes. This captures crucial value beyond just the immediate dollars and cents.

The Bottom Line: Measure Now, Win Later

Look, the AI siren song is strong in the marketing world.

Speed, efficiency, results, it sounds amazing. But swapping wishful thinking for real results demands getting serious about measurement.

Quantifying AI's velocity using a structured approach like The AI Velocity Framework isn't just nice-to-have anymore. It's how you justify spend, sharpen your strategy, and prove your worth, whether to clients or the C-suite.

Building this framework means linking AI to real goals, setting baselines, picking smart KPIs (speed, efficiency, outcomes, iteration), and tracking the good (benefits) and the bad (costs). It means facing the headaches: data chaos, attribution nightmares, ethical tightropes, getting the team on board.

This blueprint gives you a starting point. Don't wait for perfection. Start measuring now, even if it's messy. Learn, adapt, iterate. Your framework should evolve with the tech and your business needs.

Mastering AI measurement isn't just about reports; it's about building a strategic muscle. Marketing leaders who can clearly show the momentum AI brings, to their own operations and to business results, are setting themselves up for growth, stronger stakeholder relationships, and a serious edge in an AI-fueled future.

Measure today to build a smarter, faster, more successful marketing function tomorrow. Make AI deliver on its promise, but do it smartly and responsibly.

I’ll make sure I post our results as we get them in the next 30-60 days. In the meantime, if you want to talk through any of your ideas on this topic, hit me up in the chat or keep track of us at www.mightyandtrue.com.